Training Optimization

- Scaling Distributed Machine Learning with the Parameter Server

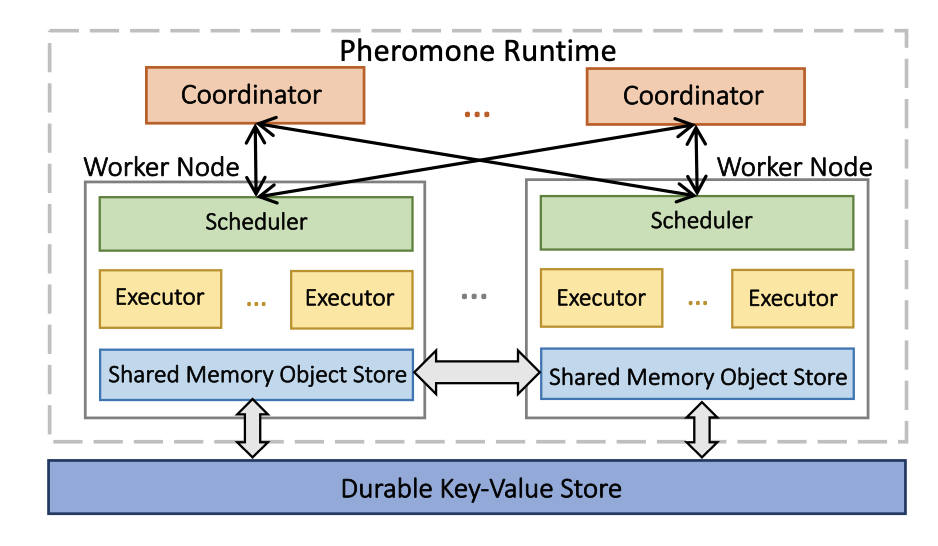

- Ray: A Distributed Framework for Emerging AI Applications

- PipeDream: Generalized Pipeline Parallelism for DNN Training

- Scaling Distributed Machine Learning with In-Network Aggregation (NSDI’21) [PDF]

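- Co-authored by Microsoft researchers. It proposes SwitchML, which aggregates the workers' model updates inside a programmable network switch.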
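Switch data planes do integer arithmetic, so SwitchML-style aggregation has workers convert floating-point updates to scaled fixed-point integers first. The sketch below shows that round trip: quantize on each worker, do an element-wise integer sum "in the switch", then dequantize. It is a minimal illustration only. The single fixed `SCALE` and all function names are hypothetical; real systems choose the scaling factor to avoid overflow and stream updates in fixed-size packets.

```python
# Toy in-network aggregation: workers send scaled integers, the "switch" sums them.
SCALE = 1 << 16                      # hypothetical fixed-point scaling factor
INT32_MIN, INT32_MAX = -(1 << 31), (1 << 31) - 1

def to_fixed(values, scale=SCALE):
    """Worker side: convert floats to scaled 32-bit integers."""
    out = []
    for v in values:
        q = round(v * scale)
        if not INT32_MIN <= q <= INT32_MAX:
            raise OverflowError(f"{v} does not fit in int32 at scale {scale}")
        out.append(q)
    return out

def switch_aggregate(packets):
    """Switch side: element-wise integer sum of one packet per worker.
    (Python ints never overflow; a real switch register would.)"""
    total = [0] * len(packets[0])
    for pkt in packets:
        for i, q in enumerate(pkt):
            total[i] += q
    return total

def from_fixed(ints, scale=SCALE):
    """Worker side: convert the aggregated integers back to floats."""
    return [q / scale for q in ints]

workers = [[0.5, -1.25, 3.0], [0.25, 0.75, -1.0], [1.0, 0.5, 0.125]]
agg = from_fixed(switch_aggregate([to_fixed(w) for w in workers]))
print(agg)  # [1.75, 0.0, 2.125]
```

All inputs here are exact multiples of 1/65536, so the sum is exact. In general, fixed-point conversion introduces a rounding error of up to half a quantization step per element.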

- Accordion: Adaptive Gradient Communication via Critical Learning Regime Identification (MLSys’21) [PDF]

- Megatron-LM: Training Multi-Billion Parameter Language Models Using Model Parallelism (ArXiv’19) [PDF] [Code]

- Work from NVIDIA. It proposes an efficient intra-layer (tensor) model-parallel partitioning tailored to the Transformer architecture, splitting the weight matrices of the MLP and self-attention blocks across GPUs.
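For the Transformer MLP block `Z = GeLU(X A) B`, Megatron-LM's partitioning splits `A` by columns and `B` by rows. Each GPU can then apply the element-wise GeLU to its own shard, and a single all-reduce (sum) of the partial outputs recovers `Z`. The pure-Python simulation below checks that equivalence. It is a sketch only: the helper names and matrices are illustrative, and the final summation loop stands in for a real collective.

```python
import math

def matmul(a, b):
    """Plain-Python product of an (n x k) and a (k x m) matrix."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]

def gelu(m):
    """Exact GeLU, applied element-wise: x * Phi(x)."""
    return [[x * 0.5 * (1.0 + math.erf(x / math.sqrt(2.0))) for x in row] for row in m]

def mlp_serial(x, a, b):
    """Reference MLP on one device: Z = GeLU(X A) B."""
    return matmul(gelu(matmul(x, a)), b)

def mlp_tensor_parallel(x, a, b, world):
    """Simulate `world` devices: A is split by columns, B by rows."""
    hidden = len(a[0])
    assert hidden % world == 0, "hidden size must divide evenly"
    step = hidden // world
    out = None
    for r in range(world):
        a_r = [row[r * step:(r + 1) * step] for row in a]  # column shard of A
        b_r = b[r * step:(r + 1) * step]                    # matching row shard of B
        # GeLU is element-wise, so each device applies it to its own shard
        part = matmul(gelu(matmul(x, a_r)), b_r)
        # Summing the partial outputs stands in for the all-reduce
        out = part if out is None else [[p + q for p, q in zip(ro, rp)]
                                        for ro, rp in zip(out, part)]
    return out

x = [[1.0, -0.5], [0.25, 2.0]]
a = [[0.1, -0.2, 0.3, 0.4], [0.5, 0.6, -0.7, 0.8]]
b = [[0.1, 0.2], [-0.3, 0.4], [0.5, -0.6], [0.7, 0.8]]
serial = mlp_serial(x, a, b)
parallel = mlp_tensor_parallel(x, a, b, world=2)
match = all(math.isclose(s, p, rel_tol=1e-9, abs_tol=1e-12)
            for rs, rp in zip(serial, parallel) for s, p in zip(rs, rp))
print(match)  # True
```

The outputs are compared with a tolerance because the sharded version adds the same terms in a different order.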

- Scaling Distributed Training with Adaptive Summation (MLSys’21) [PDF]

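- Proposes Adasum (adaptive summation), a technique that improves convergence in distributed data-parallel training when the minibatch is very large. This permits more parallelism and therefore higher system efficiency.

- In essence, Adasum combines the gradients computed on several smaller minibatches so that the single resulting large-minibatch update approximates applying those smaller-minibatch updates one after another.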
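The pairwise Adasum rule is `(1 - g1·g2 / (2‖g1‖²)) g1 + (1 - g1·g2 / (2‖g2‖²)) g2`. Orthogonal gradients are therefore summed, as two sequential steps would be, while identical gradients are averaged. The sketch below applies the rule pairwise in a simple binary tree. The tree order and the zero-vector guard are assumptions made for illustration; production implementations (e.g. Horovod's) organize the reduction differently.

```python
def dot(u, v):
    return sum(x * y for x, y in zip(u, v))

def adasum_pair(g1, g2):
    """Combine two gradients with the pairwise Adasum rule."""
    d = dot(g1, g2)
    n1, n2 = dot(g1, g1), dot(g2, g2)
    # Assumption: a zero-norm gradient contributes nothing, so its coefficient is moot
    c1 = 1.0 - d / (2.0 * n1) if n1 > 0 else 1.0
    c2 = 1.0 - d / (2.0 * n2) if n2 > 0 else 1.0
    return [c1 * x + c2 * y for x, y in zip(g1, g2)]

def adasum(grads):
    """Reduce per-worker gradients pairwise, level by level (binary tree)."""
    while len(grads) > 1:
        nxt = [adasum_pair(grads[i], grads[i + 1])
               for i in range(0, len(grads) - 1, 2)]
        if len(grads) % 2:
            nxt.append(grads[-1])  # odd one out is carried up a level
        grads = nxt
    return grads[0]

print(adasum_pair([1.0, 0.0], [0.0, 1.0]))  # orthogonal -> sum: [1.0, 1.0]
print(adasum_pair([2.0, 2.0], [2.0, 2.0]))  # parallel -> average: [2.0, 2.0]
print(adasum([[1.0, 0.0], [0.0, 1.0], [1.0, 0.0], [0.0, 1.0]]))  # [1.0, 1.0]
```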

- PipeMare: Asynchronous Pipeline Parallel DNN Training (MLSys’21) [PDF]

- PatrickStar: Parallel Training of Pre-trained Models via a Chunk-based Memory Management (arXiv’21) [PDF]

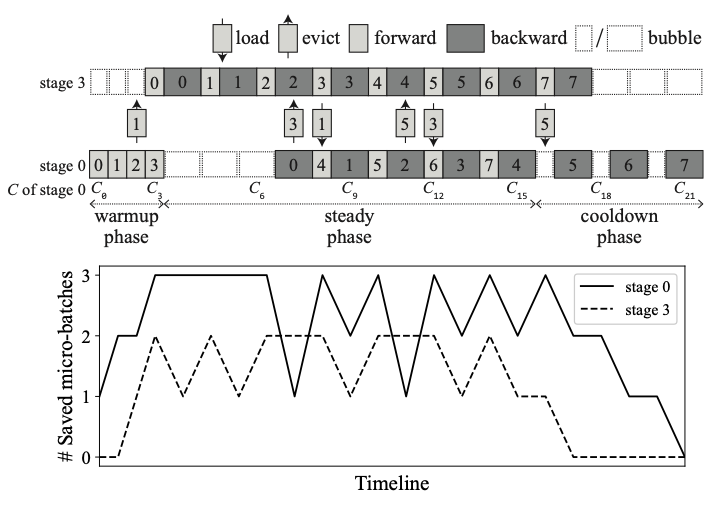

- Dapple (PPoPP’21)

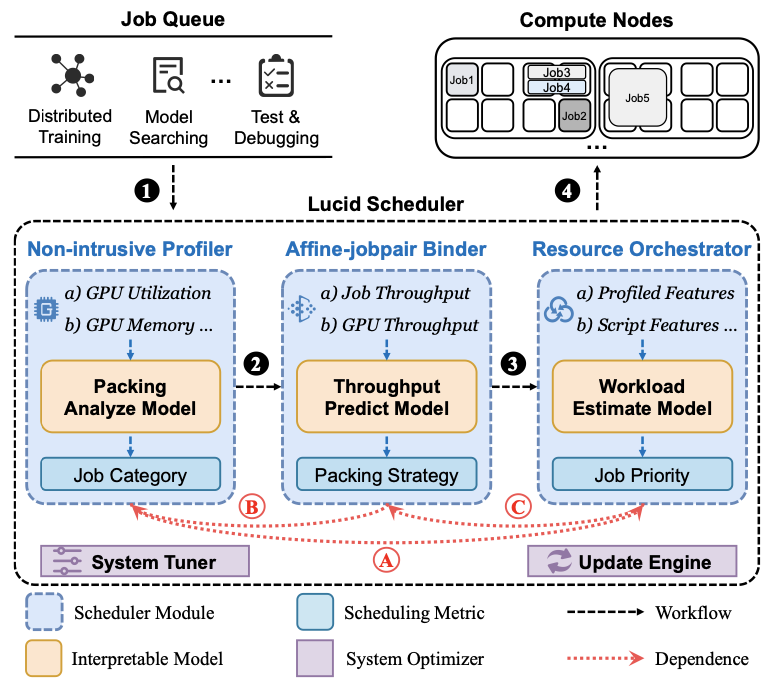

- Varuna: Scalable, Low-cost Training of Massive Deep Learning Models (EuroSys’22) [PDF] [Code]

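- A joint paper from MSR and CMU. It modestly improves the model-partitioning step of pipeline parallelism, targets multi-GPU distributed training, and runs the up-front profiling in parallel across multiple GPUs.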

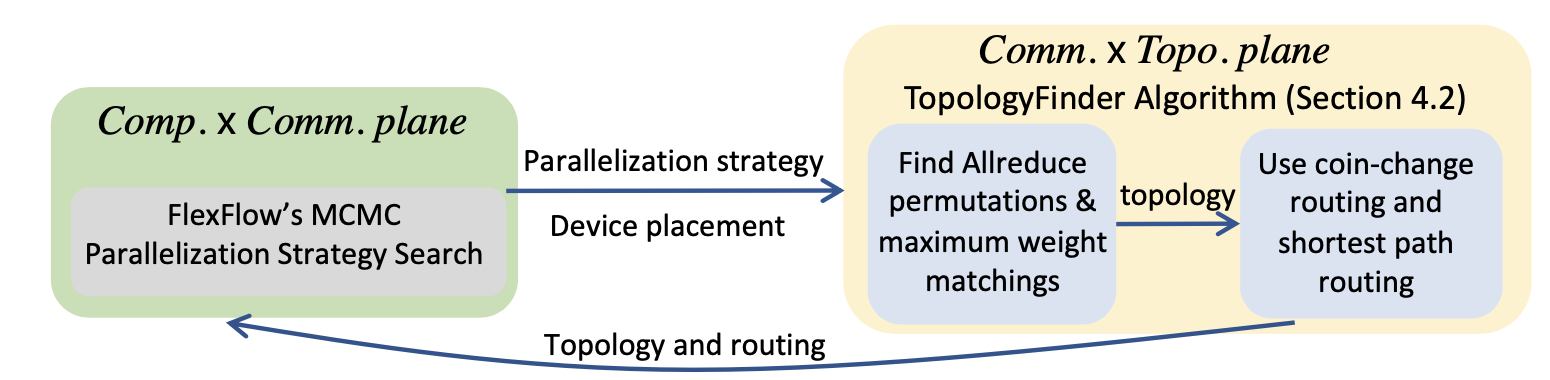
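A common simplified form of the pipeline model-partitioning problem is this: split a chain of layers with profiled costs into `k` contiguous stages so that the slowest stage, which bounds pipeline throughput, is as fast as possible. The dynamic program below solves that simplified objective. It is a sketch under the stated assumption. The planners in Varuna, PipeDream and similar systems also account for communication, memory limits and scheduling, which this ignores. `partition_layers` is a hypothetical helper.

```python
from functools import lru_cache

def partition_layers(costs, num_stages):
    """Split per-layer costs into `num_stages` contiguous stages, minimizing
    the cost of the slowest stage. Returns (bottleneck, stage_end_indices)."""
    n = len(costs)
    if not 1 <= num_stages <= n:
        raise ValueError("need 1 <= num_stages <= number of layers")
    prefix = [0]
    for c in costs:
        prefix.append(prefix[-1] + c)

    @lru_cache(maxsize=None)
    def best(i, k):
        # Best bottleneck for layers[i:] using exactly k stages.
        if k == 1:
            return prefix[n] - prefix[i], (n,)
        result = (float("inf"), ())
        # First stage is layers[i:j]; leave at least k-1 layers for the rest.
        for j in range(i + 1, n - k + 2):
            rest, cuts = best(j, k - 1)
            cand = (max(prefix[j] - prefix[i], rest), (j,) + cuts)
            if cand[0] < result[0]:
                result = cand
        return result

    bottleneck, cuts = best(0, num_stages)
    return bottleneck, list(cuts)

cost, cuts = partition_layers([4, 2, 3, 1, 5, 3], num_stages=3)
print(cost, cuts)  # bottleneck 8; each index is the exclusive end of a stage
```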

- Alpa: Automating Inter- and Intra-Operator Parallelism for Distributed Deep Learning (OSDI’22) [PDF]

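- From UC Berkeley's RISE Lab. Alpa tries to automatically find the best model-parallelization plan for deep learning workloads, combining inter-operator (pipeline) and intra-operator (sharding) parallelism.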

- Unity: Accelerating DNN Training Through Joint Optimization of Algebraic Transformations and Parallelization (OSDI’22) [PDF]

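- DNNs keep growing, and training them is increasingly expensive. Algebraic transformations and parallelization are two optimization angles that have each delivered significant speedups, and Unity optimizes the two jointly. It introduces the parallel computation graph (PCG), in which every parallelization strategy can be expressed.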

- ZeRO++: Extremely Efficient Collective Communication for Giant Model Training (ArXiv’23) [PDF]

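- Uses three techniques to relieve ZeRO's communication bottleneck in settings such as low-bandwidth clusters or small per-GPU batch sizes: quantized weight communication (qwZ), hierarchical weight partitioning (hpZ), and quantized gradient communication (qgZ).

- Blocked quantization reduces communication volume with only a small loss of precision, mitigating the accuracy degradation that quantization would otherwise cause.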
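Blocked quantization gives each block of a tensor its own scale, so a single outlier only degrades precision within its own block. The sketch below compares symmetric int8 quantization with one global scale against per-block scales on a vector containing one large value. The max-abs scaling, the block size of 4 and all function names are assumptions for illustration, not ZeRO++'s exact kernels.

```python
def quantize_blocked(values, block_size, bits=8):
    """Symmetric per-block quantization: each block gets its own scale."""
    qmax = (1 << (bits - 1)) - 1  # 127 for int8
    blocks = []
    for start in range(0, len(values), block_size):
        block = values[start:start + block_size]
        amax = max(abs(v) for v in block)
        scale = amax / qmax if amax > 0 else 1.0
        q = [max(-qmax, min(qmax, round(v / scale))) for v in block]
        blocks.append((scale, q))
    return blocks

def dequantize_blocked(blocks):
    return [scale * q for scale, qs in blocks for q in qs]

def max_abs_error(a, b):
    return max(abs(x - y) for x, y in zip(a, b))

# Small values in the first block, one large outlier in the second block.
grad = [0.01, -0.02, 0.015, 0.03, 8.0, 0.001, 0.002, -0.001]
global_err = max_abs_error(grad, dequantize_blocked(quantize_blocked(grad, len(grad))))
blocked_err = max_abs_error(grad, dequantize_blocked(quantize_blocked(grad, 4)))
print(global_err, blocked_err)
```

With a single global scale, the outlier makes the step size about 0.063, so all the small values in the first block round to 0. With per-block scales, the first block keeps a fine step size.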

Communication Optimization

- Blink: Fast and Generic Collectives for Distributed ML (MLSys’20) [PDF]

- ATP: In-network Aggregation for Multi-tenant Learning (NSDI’21 Best Paper) [PDF] [Code]

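- A collaboration between Assistant Professor Wenfei Wu's group at Tsinghua University and Professor Aditya Akella's group at the University of Wisconsin-Madison. ChonLam Lao (刘俊林) and Yanfang Le are co-first authors.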

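- Depending on network conditions, ATP performs aggregation either on the switch or on the parameter server (PS). It accounts for contention among tenants in a multi-tenant setting and redesigns packet-loss recovery and congestion control. It resembles Microsoft's SwitchML in several respects.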

- PET: Optimizing Tensor Programs with Partially Equivalent Transformations and Automated Corrections (OSDI’21) [PDF]

- Exploring Multi-dimensional Hierarchical Network Topologies for Efficient Distributed Training of Trillion Parameter DL Models (ArXiv’21) [PDF]

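- Main question: in distributed training on NPUs, how should bandwidth be allocated across the dimensions of the network topology to maximize efficiency? The paper measures the results of three schemes.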
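To see why the bandwidth split across dimensions matters, consider a toy model. This is an assumption, not the paper's methodology. A hierarchical ring all-reduce runs one dimension at a time, and dimension `i` with `p_i` nodes moves `2(p_i - 1)/p_i` of the data it sees at its own bandwidth. Each later dimension sees a shard that is `p_i` times smaller, so equal bandwidth per dimension is wasteful. The brute-force search below tries every integer split of a fixed bandwidth budget. Function names and units are hypothetical.

```python
from itertools import product

def allreduce_time(dims, bandwidth, size):
    """Toy hierarchical ring all-reduce: reduce-scatter through dims 1..d,
    then all-gather back. Each dim carries 2*(p-1)/p of the data it sees."""
    total, data = 0.0, size
    for p, bw in zip(dims, bandwidth):
        total += 2 * (p - 1) / p * data / bw
        data /= p  # later dimensions operate on a smaller shard
    return total

def best_split(dims, total_units, size):
    """Try every integer split of `total_units` bandwidth units (>= 1 each)."""
    best = None
    for split in product(range(1, total_units + 1), repeat=len(dims)):
        if sum(split) != total_units:
            continue
        t = allreduce_time(dims, split, size)
        if best is None or t < best[0]:
            best = (t, split)
    return best

dims = [4, 4, 4]
equal = allreduce_time(dims, [4, 4, 4], size=1.0)
t_best, split = best_split(dims, total_units=12, size=1.0)
print(split, round(t_best, 4), round(equal, 4))
```

Under this model the first dimension carries the most data and should get the largest share of bandwidth.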

Performance Prediction and Modeling

- Pollux

- Proteus: Simulating the Performance of Distributed DNN Training (arXiv’23) [PDF]

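- Represents parallelization within subgraphs and across operators as a tree, compiles the computation into an executable form, and models its performance. A profiler records each op's compute performance without inter-op overlap, and these profiles are then used to estimate the parameters of the performance model.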
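Once each op has a profiled duration measured in isolation, a simulator can replay the graph to estimate how much computation and communication overlap. The sketch below is a generic two-stream list scheduler, not Proteus's simulator. Each stream runs one op at a time, and an op starts when its stream is free and its dependencies have finished. It deliberately ignores interference between overlapped ops. The op names and durations are made up.

```python
def simulate(ops):
    """ops: (name, stream, duration, deps) tuples in topological order.
    Returns (makespan, finish_time_per_op)."""
    stream_free = {}
    finish = {}
    for name, stream, duration, deps in ops:
        ready = max((finish[d] for d in deps), default=0.0)
        start = max(ready, stream_free.get(stream, 0.0))
        finish[name] = start + duration
        stream_free[stream] = finish[name]
    return max(finish.values()), finish

# Backward pass of three layers; each layer's gradient all-reduce can overlap
# with the next layer's backward computation.
ops = [
    ("bwd3", "compute", 2.0, []),
    ("ar3", "comm", 1.5, ["bwd3"]),
    ("bwd2", "compute", 2.0, ["bwd3"]),
    ("ar2", "comm", 1.5, ["bwd2"]),
    ("bwd1", "compute", 2.0, ["bwd2"]),
    ("ar1", "comm", 1.5, ["bwd1"]),
]
makespan, finish = simulate(ops)
print(makespan)  # 7.5, versus 12.0 if every op ran back to back
```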

- DistSim: A performance model of large-scale hybrid distributed DNN training (CF’23) [PDF]

- Models hybrid training along the data-, model-, and pipeline-parallelism dimensions.
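A back-of-the-envelope model shows how the three dimensions interact. Data parallelism (DP) divides the batch, tensor/model parallelism (TP) and pipeline parallelism (PP) divide each replica's work, and a GPipe-style pipeline over `pp` stages with `m` micro-batches takes `(m + pp - 1)` stage-slots, which includes the bubble. This is a hypothetical toy estimate, not DistSim's model. It assumes perfect work splitting, lumps forward and backward together, and treats TP communication and the DP all-reduce as fixed costs.

```python
def iteration_time(total_compute, dp, tp, pp, micro_batches,
                   tp_overhead, dp_allreduce):
    """Toy per-iteration time for hybrid DP x TP x PP training.
    total_compute: single-GPU time for one global batch (fwd + bwd).
    tp_overhead:   assumed TP communication cost per stage per micro-batch.
    dp_allreduce:  assumed non-overlapped gradient all-reduce time."""
    per_replica = total_compute / dp                        # DP splits the batch
    slot = per_replica / (tp * pp * micro_batches) + tp_overhead
    pipeline = (micro_batches + pp - 1) * slot              # includes pp-1 bubble slots
    return pipeline + dp_allreduce

print(iteration_time(64.0, dp=2, tp=2, pp=4, micro_batches=8,
                     tp_overhead=0.0, dp_allreduce=0.5))   # 6.0
print(iteration_time(64.0, dp=2, tp=2, pp=4, micro_batches=32,
                     tp_overhead=0.0, dp_allreduce=0.5))   # 4.875
```

More micro-batches shrink the bubble fraction `(pp - 1) / (m + pp - 1)`. A more detailed simulator would also capture per-micro-batch overheads and overlap effects that this toy formula ignores.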
